As a quick follow-up to my last post on the midpoint review of the M6 competition, I have looked into the actual performance statistics of my entries in the first half of the competition.

The results are surprising in a few respects:

- The results exhibit huge fluctuations from month to month. (Perhaps partially reflecting the current uncertain and volatile market conditions.)

- My forecasting results are better than my own initial expectation, beating the benchmark (i.e. 0.16) in most months. (Something happened in Mar & Apr 2022 that I will explain below.)

- My investment decision results are as bad as expected, due to the conservative strategy that I have used.

| Submission Number | Date | Overall Rank | Performance (Forecasts) | Rank (Forecasts) | Performance (Decisions) | Rank (Decisions) |
|---|---|---|---|---|---|---|
| 1st | Feb 2022 | 36.5 | 0.15984 | 46 | 4.28492 | 27 |
| 2nd | Mar 2022 | 111.5 | 0.16619 | 92 | -6.08808 | 131 |
| 3rd | Apr 2022 | 115.5 | 0.16132 | 109 | 0.04113 | 122 |
| 4th | May 2022 | 64 | 0.15949 | 60 | -1.96181 | 68 |
| 5th | June 2022 | 65 | 0.15294 | 6 | -0.7612 | 124 |
| 6th | July 2022 | 18 | 0.14366 | 1 | 3.79556 | 35 |

Huge fluctuations in results

The fluctuations in results for both the forecasting and investment decision categories are huge, changing quite wildly from month to month despite (mostly) the same approaches being used throughout the period.

This can be explained by the widely known low signal-to-noise ratio observed in stock markets, which makes both forecasting and investment decisions difficult problems to solve.

However, the stock markets in the first half of 2022 were also more volatile than usual, due to a combination of uncertain factors at work (including pandemic, war, recession and inflation). One example of this can be seen in the elevated levels of the CBOE Volatility Index (VIX), which roughly tracks the “fear” in the S&P 500 index.

Forecasting results are better than my own expectations

In the previous 5 months, my forecasting submissions beat the benchmark 3 times (i.e. scored lower than 0.16). The benchmark sits at 0.16, which is the score one gets by assuming no knowledge of the future ranked returns of each stock.
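For readers wondering where 0.16 comes from, here is a minimal sketch. It assumes that forecasts are scored with the Ranked Probability Score (RPS) over five return quintiles, and that the "no knowledge" forecast assigns a probability of 0.2 to every quintile. The function name `rps` and the variable names are my own illustrations, not anything taken from the competition's code.

```python
from itertools import accumulate


def rps(forecast, outcome_quintile):
    """Ranked Probability Score for a single stock (lower is better).

    forecast: five probabilities for quintiles 1..5, summing to 1.
    outcome_quintile: the realised quintile, from 1 (worst) to 5 (best).
    """
    # One-hot vector marking the quintile the stock actually landed in.
    outcome = [1.0 if k == outcome_quintile else 0.0 for k in range(1, 6)]
    # RPS compares cumulative distributions, so near misses cost less.
    cum_forecast = list(accumulate(forecast))
    cum_outcome = list(accumulate(outcome))
    squared_gaps = [(f - o) ** 2 for f, o in zip(cum_forecast, cum_outcome)]
    return sum(squared_gaps) / 5


# Assumed "no knowledge" forecast: every quintile is equally likely.
uniform = [0.2] * 5

# Score the uniform forecast against each possible outcome, then average,
# since by construction a fifth of the stocks land in each quintile.
per_quintile = [rps(uniform, q) for q in range(1, 6)]
benchmark = sum(per_quintile) / len(per_quintile)

print([round(s, 2) for s in per_quintile])
print(round(benchmark, 2))
```

Under these assumptions the uniform forecast averages out to 0.16, so any monthly score below that means the submission carried at least some information about the ranking.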

For the 2 months in which I failed to beat the benchmark, something actually happened behind the scenes, mostly because I lacked the time to properly vet and test the solution. For the submissions in Mar and Apr 2022, my algorithm spat out results that I uploaded as usual. But I noticed that my performance had degraded severely in those 2 months, so I made a few changes to the algorithm:

- Set up unit tests around a few of the critical functions

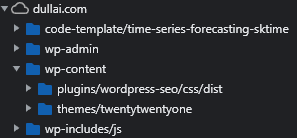

- Checked data sources in detail to ensure quality and remove any low-quality data sources as inputs

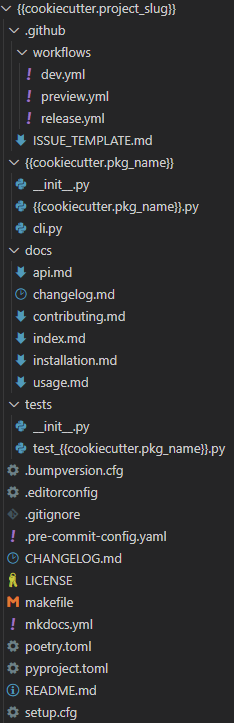

- Rewrote the forecasting module myself, discarding a pre-built pipeline from a library that I had been using

- Added diagnostic checks around the models that I trained each time to ensure that there was nothing off during the training process

A funny side story: it was only after I managed to add the diagnostic checks that I noticed the models I trained in Mar and Apr 2022 could not distinguish effectively between the worst and best-performing stocks (i.e. they treated them almost interchangeably).

Even now, I still do not know what the exact cause was (if any), whether my algorithm is mostly bug-free now, or even whether my current result is just a fluke.
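To give an idea of what such a diagnostic check can look like, here is a minimal sketch. It is not the check I actually run; it is one possible way to measure whether a model separates the best from the worst stocks. It assumes each forecast is a list of five quintile probabilities, and the function names, tickers and numbers below are all made up for illustration.

```python
def expected_quintile(probs):
    """Mean quintile implied by five probabilities (quintiles 1..5)."""
    return sum(q * p for q, p in enumerate(probs, start=1))


def best_vs_worst_separation(forecasts, realised):
    """Fraction of (best, worst) stock pairs that the model orders correctly.

    forecasts: dict mapping ticker -> five quintile probabilities.
    realised: dict mapping ticker -> realised quintile (1 = worst, 5 = best).
    A value near 1.0 means clear separation; a value near 0.5 means the
    model treats the two groups almost interchangeably.
    """
    best = [expected_quintile(forecasts[t]) for t, q in realised.items() if q == 5]
    worst = [expected_quintile(forecasts[t]) for t, q in realised.items() if q == 1]
    if not best or not worst:
        raise ValueError("need at least one stock in each extreme quintile")
    # Count a pair as a win when the best stock gets the higher expected
    # quintile; ties count as half a win.
    wins = sum((b > w) + 0.5 * (b == w) for b in best for w in worst)
    return wins / (len(best) * len(worst))


# Hypothetical toy data: two stocks with opposite realised outcomes.
realised = {"AAA": 5, "BBB": 1}
sharp = {"AAA": [0.0, 0.0, 0.0, 0.2, 0.8], "BBB": [0.8, 0.2, 0.0, 0.0, 0.0]}
blind = {"AAA": [0.2] * 5, "BBB": [0.2] * 5}

sharp_score = best_vs_worst_separation(sharp, realised)
blind_score = best_vs_worst_separation(blind, realised)
print(sharp_score, blind_score)
```

A score hovering around 0.5 on held-out months is the kind of warning sign I would have wanted to see before uploading the Mar and Apr 2022 submissions.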

Investment decision results are as bad as expected

My investment decision results are as bad as expected.

Firstly, I spent the least time on this part, and even now I have not set up a proper framework (i.e. backtesting integration). Secondly, and partially because of the first reason, I am running my investments using a very traditional and conservative approach, which I will not name now as the competition is still ongoing.

Compared to the forecasting component, my investment decision component is mostly so simple that anyone can replicate it in an Excel file. The only more complex part is the portfolio allocation algorithm. I have written a simple portfolio allocation algorithm to manage risks, but it is too trivial to be worth any special mention.

As a fun fact, I made a drastic change to my investment strategy as of the 6th submission. It will be interesting to see how it turns out.

Ending

While I feel nervous posting this performance summary, knowing that the results for my latest (and best so far) 6th submission are still pending, I decided to share it now to stick to my blog post schedule.

Hopefully you get something out of this post, and I hope that it will not be laughed at as an example of premature celebration. Let’s see how things go for the remaining half of this competition!